Cloud Spanner Region Configuration for Topology Service

| Status | Authors | Coach | DRIs | Owning Stage | Created |
|---|---|---|---|---|---|
| | a_richter | | | devops tenant-scale | 2025-05-08 |

The Topology Service in the Cells architecture requires a highly available database with strong consistency guarantees. Cloud Spanner offers both regional and multi-regional configurations. For our implementation, we need to determine the optimal configuration that balances performance, disaster recovery capabilities, and cost.

Key considerations:

- Client-side latency, for example HTTP Router -> Topology Service.

- Server-side latency: communication between Spanner replicas, such as Paxos replication.

- Disaster recovery needs and resilience against regional outages.

- Cost implications for different configurations.

- SLAs provided by Google.

Multi-regional configurations under consideration:

- nam3: read-write replicas in the eastern US, and optional replicas in Europe and Asia.

- nam11: read-write replicas across multiple North American regions.

- nam-eur-asia3: read-write replicas across North America, and read-only replicas across Europe and Asia.

Client Side Latency Testing

We tested the latency from various GCP regions to the current Topology Service, hosted in us-east1/us-central1, to mimic our production environment, where HTTP Router can send requests to it from all over the globe. Testing was performed using GCP VMs running the k6 load testing tool, which gives an indication of cross-region latency patterns.

Test VMs were created in the following regions:

- asia-east1-a (n1-standard-4)

- europe-west1-b (n1-standard-4)

- us-east1 (n1-standard-4)

- us-central1 (n1-standard-4)

The k6 test script made POST requests to the Topology Service’s /v1/classify endpoint with a single virtual user over 30 seconds.

k6 Latency Testing Methodology

See this comment for further details.

```javascript
import http from 'k6/http';
import { check } from 'k6';

export const options = {
  vus: 1, // Number of virtual users
  duration: '30s', // Test duration
};

export default function () {
  const url = 'https://topology-rest.runway.gitlab.net/v1/classify';

  // Ask the Topology Service to classify cell ID "2". k6 records the
  // round-trip time of each request in its built-in http_req_duration metric.
  const payload = JSON.stringify({
    type: "CELL_ID",
    value: "2",
  });

  const params = {
    headers: {
      'Content-Type': 'application/json',
    },
  };

  const response = http.post(url, payload, params);

  // Check if the request was successful
  check(response, {
    'status is 200': (r) => r.status === 200,
  });
}
```

Results

Baseline Configuration (us-east1/us-central1 deployment):

| Run | us-east1 | europe-west1-b | asia-east1-c |
|---|---|---|---|
| 1 | 11.57ms | 105.69ms | 182.41ms |
| 2 | 11.27ms | 106.12ms | 188.10ms |
| 3 | 12.04ms | 105.12ms | 182.79ms |
| Avg | 11.63ms | 105.64ms | 184.43ms |

Multi-Regional Configuration (us-east1/us-central1/europe-west1/asia-east1 deployment):

| Run | us-east1 | europe-west1 | asia-east1 | us-central1 |
|---|---|---|---|---|
| 1 | 14.35ms | 17.59ms | 11.48ms | 31.30ms |
| 2 | 14.82ms | 18.12ms | 11.23ms | 32.87ms |
| 3 | 13.92ms | 17.21ms | 11.79ms | 30.76ms |
| Avg | 14.36ms | 17.64ms | 11.50ms | 31.64ms |
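The "Avg" rows are the arithmetic mean of the three runs. As a quick sanity check, the sketch below recomputes them from the per-run values in the two tables; the object names `baseline` and `multiRegional` and the helper functions are illustrative, not part of the test tooling.

```javascript
// Per-run latencies (ms) copied from the two result tables.
const baseline = {
  "us-east1": [11.57, 11.27, 12.04],
  "europe-west1-b": [105.69, 106.12, 105.12],
  "asia-east1-c": [182.41, 188.10, 182.79],
};
const multiRegional = {
  "us-east1": [14.35, 14.82, 13.92],
  "europe-west1": [17.59, 18.12, 17.21],
  "asia-east1": [11.48, 11.23, 11.79],
  "us-central1": [31.30, 32.87, 30.76],
};

// Arithmetic mean of the runs, rounded to two decimals like the "Avg" rows.
function averageMs(runs) {
  const sum = runs.reduce((acc, ms) => acc + ms, 0);
  return Math.round((sum / runs.length) * 100) / 100;
}

// Maps every region in a results table to its average latency.
function averages(table) {
  return Object.fromEntries(
    Object.entries(table).map(([region, runs]) => [region, averageMs(runs)])
  );
}

console.log(averages(baseline));
console.log(averages(multiRegional));
```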

Analysis

The latency testing reveals significant improvements for international regions when using multi-regional deployment:

- Europe latency improvement: 83% reduction (105.64ms → 17.64ms)

- Asia latency improvement: 94% reduction (184.43ms → 11.50ms)

- US East Coast: 23% increase (11.63ms → 14.36ms) due to distributed routing
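The percentages above are relative changes of the averages, (after − before) / before. A small sketch that reproduces them from the "Avg" rows (the `averagesMs` object and `percentChange` helper are illustrative names):

```javascript
// Average latencies (ms) from the "Avg" rows of the two result tables.
const averagesMs = {
  europe: { baseline: 105.64, multiRegional: 17.64 },
  asia: { baseline: 184.43, multiRegional: 11.5 },
  usEast: { baseline: 11.63, multiRegional: 14.36 },
};

// Relative change in percent; a negative value means latency went down.
function percentChange(before, after) {
  return ((after - before) / before) * 100;
}

for (const [region, { baseline, multiRegional }] of Object.entries(averagesMs)) {
  const change = percentChange(baseline, multiRegional);
  console.log(`${region}: ${change.toFixed(1)}%`);
}
```

Rounded to whole percentages this gives −83% for Europe, −94% for Asia, and +23% for the US East Coast.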

These results demonstrate that while a North America-only configuration such as nam11 serves North American users well, future EU- or Asia-based deployments would see substantial performance benefits from a configuration with replicas in those regions, such as nam-eur-asia3.

Server Side Latency

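Spanner uses Paxos for replication. In a multi-region configuration, a write commits only after a majority of the voting replicas acknowledge it, and because no single region holds a majority of those replicas, every write waits for at least one other region.

The read-write regions therefore need low latency between them to keep writes fast.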

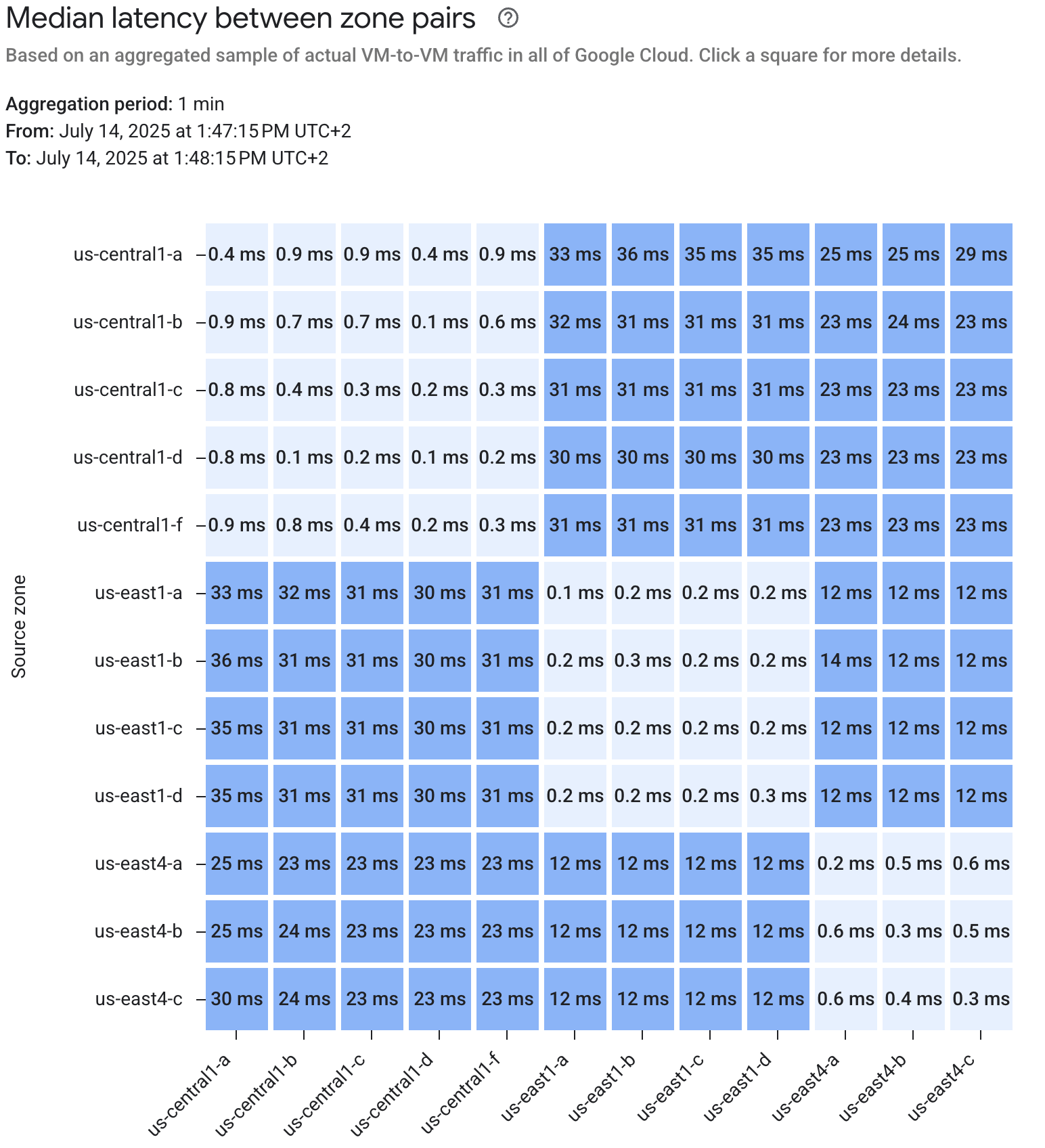

The Performance Dashboard above shows that latency is lowest between us-east1 and us-east4, the two read-write regions of nam3.
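To illustrate why inter-region latency dominates writes, here is a deliberately simplified quorum model. It is an assumption for illustration, not Spanner's actual commit path: it ignores commit wait, CPU time, and retries, and assumes a nam3-style layout of two read-write replicas per read-write region plus one witness. `estimateCommitLatencyMs` and `nam3StyleCommitLatencyMs` are hypothetical helpers.

```javascript
// Simplified model (assumption): each acknowledgement arrives one round trip
// after the leader sends it, and the write commits once a majority has voted.
function estimateCommitLatencyMs(voterRttsMs) {
  // Fastest acknowledgements first; the leader's own vote has an RTT of 0.
  const sorted = [...voterRttsMs].sort((a, b) => a - b);
  const majority = Math.floor(sorted.length / 2) + 1;
  // The write can commit once the majority-th fastest vote has arrived.
  return sorted[majority - 1];
}

// Hypothetical helper for a nam3-style layout: 2 read-write replicas in the
// leader region, 2 in the other read-write region, and 1 witness replica.
function nam3StyleCommitLatencyMs(intraRegionRttMs, otherReadWriteRttMs, witnessRttMs) {
  return estimateCommitLatencyMs([
    0, // leader replica
    intraRegionRttMs, // second replica in the leader region
    otherReadWriteRttMs, // first replica in the other read-write region
    otherReadWriteRttMs, // second replica in the other read-write region
    witnessRttMs, // witness replica
  ]);
}
```

With five voters the majority is three, so under this model the estimate is the smaller of the round trips to the other read-write region and to the witness (whenever the intra-region round trip is the fastest). Keeping the two read-write regions close together therefore bounds write latency.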

Decision

We will base our instance configuration on nam3 with the following modifications:

- us-east1 as primary instead of us-east4: our primary region is us-east1.

- Additional read-only replicas: HTTP Router is globally distributed, so the Topology Service needs to be as well to keep classification latency low. This requires additional read-only replicas.

| Read-Write Regions | Read-Only Regions | Witness Region | Optional Read-Only Regions |
|---|---|---|---|
| us-east1 (primary), us-east4 | us-west2, asia-southeast1, europe-west1 | us-central1 | asia-southeast2, europe-west2 |
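The sketch below encodes this table as data and checks the property the Benefits section relies on: growing the configuration only adds read-only regions while the leader, read-write, and witness regions stay the same. The object shape and `isReadOnlyExpansion` are hypothetical and not a Spanner or Terraform API. Whether a given change triggers an instance move is decided by Spanner, not by this check.

```javascript
// Hypothetical encoding of the proposed configuration; field names are illustrative.
const proposedConfig = {
  defaultLeader: "us-east1",
  readWrite: ["us-east1", "us-east4"],
  witness: ["us-central1"],
  readOnly: ["us-west2", "asia-southeast1", "europe-west1"],
};

// True when both arrays contain the same regions, ignoring order.
function sameRegions(a, b) {
  return a.length === b.length && a.every((region) => b.includes(region));
}

// True when `next` keeps the leader, read-write, and witness regions of
// `current` and only adds read-only regions on top of the existing ones.
function isReadOnlyExpansion(current, next) {
  return (
    current.defaultLeader === next.defaultLeader &&
    sameRegions(current.readWrite, next.readWrite) &&
    sameRegions(current.witness, next.witness) &&
    current.readOnly.every((region) => next.readOnly.includes(region)) &&
    next.readOnly.length > current.readOnly.length
  );
}

// Example: enabling the two optional read-only regions from the table.
const expandedConfig = {
  ...proposedConfig,
  readOnly: [...proposedConfig.readOnly, "asia-southeast2", "europe-west2"],
};

console.log(isReadOnlyExpansion(proposedConfig, expandedConfig)); // true
```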

We will rely on Google’s default encryption for data at rest, which is automatically enabled, rather than implementing Customer-Managed Encryption Keys (CMEK).

Consequences

Changing the Spanner topology triggers an instance move. Applying that change with Terraform can result in data loss because Terraform re-creates the instance. However, if we add the read-only replicas right before we add the Legacy Cell to the Cells Cluster, there would be no data loss.

Benefits

- Lower cost until we need more: we can start small and expand without changing the read-write topology.

- Lowest server-side latency for writes.

- Ability to expand to other continents, such as Europe and Asia, to reduce client-side latency.

- Less maintenance overhead than managing encryption keys with CMEK.

Alternatives Considered

Alternative 1: nam-eur-asia3

Context: Read-write in us-east1 and us-central1 with out-of-the-box read-only regions.

Decision: Not ideal for server-side latency, and high cost from the start with little regional expandability.

Alternative 2: nam11

Context: Read-write in us-east1 and us-central1 with a single optional read-only region.

Decision: Not ideal for server-side latency, with little regional expandability.

Cost Analysis

| Configuration | Compute | Replication | Total Cost |
|---|---|---|---|
| nam3 | $12,138.60 | $888.18 | $13,026.78 |
| nam11 | $11,702.50 | $888.18 | $12,590.68 |
| nam-eur-asia3 | $20,751.58 | $1,937.12 | $22,688.70 |

This estimate is based on:

- 5 nodes of compute capacity

- 1 TB of SSD storage

- 10 GB of data written/modified per hour

- Enterprise Plus Edition
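Each total is compute plus replication. The sketch below recomputes the totals from the table and expresses each configuration relative to nam3. It works in cents to avoid floating-point drift; `estimates` and `totalUsd` are illustrative names.

```javascript
// Cost figures (USD) from the cost table: 5 nodes, 1 TB SSD,
// 10 GB written/modified per hour, Enterprise Plus Edition.
const estimates = {
  nam3: { compute: 12138.6, replication: 888.18 },
  nam11: { compute: 11702.5, replication: 888.18 },
  "nam-eur-asia3": { compute: 20751.58, replication: 1937.12 },
};

// Convert dollars to integer cents so the addition is exact.
const toCents = (usd) => Math.round(usd * 100);

function totalUsd({ compute, replication }) {
  return (toCents(compute) + toCents(replication)) / 100;
}

const nam3Total = totalUsd(estimates.nam3);
for (const [name, cost] of Object.entries(estimates)) {
  const total = totalUsd(cost);
  const relative = ((total / nam3Total - 1) * 100).toFixed(1);
  console.log(`${name}: $${total.toFixed(2)} (${relative}% vs nam3)`);
}
```

Under these assumptions nam11 is about 3.3% cheaper than nam3, while nam-eur-asia3 costs about 74% more.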
