Designing your survey

Designing a survey isn’t easy. It requires thoughtful planning to make sure the flow of the survey is easy to follow, the questions are clear to the respondent, and the output yields accurate data.

Here are some of the more important tips to consider when designing a survey:

- Only include survey questions that directly relate to your research questions. It can be exciting to learn about your users, and survey writers tend to include questions that they are curious about but that won’t directly impact their product decisions or planning. A best practice is to start with a planning table where you list your research questions in one column, the survey questions you’ll use to answer them in a second column, and what you plan to do with the data in a third column. This helps you think through whether each survey question is nice-to-have or necessary to answer your research questions.

- Ensure the questions are clear and cannot be easily confused. Since surveys are unmoderated, you won’t be there to provide clarification. That means your survey and its questions need to be simple and clear to your respondents. A great way to check this is to pilot the survey through UserTesting.com, where you can watch respondents think aloud while taking your survey. It’s also OK to provide definitions of terms when it makes sense.

- Use neutral language. Avoid positively or negatively worded questions to reduce bias in your results. You’ll be surprised how easy it is to inadvertently write leading questions.

- Make sure each question covers a single topic. Avoid double-barreled questions by breaking out any question that is really two or more questions in one. Not only are double-barreled questions difficult for respondents to answer, they’re also hard to analyze and act upon.

- Use standard survey questions. Luckily, surveys have been around for quite some time, resulting in standardized questions that are commonly used in the industry, such as the System Usability Scale. Before spending time designing a survey, check whether standardized survey questions are available that may help answer your research questions. If so, use those rather than reinventing the wheel.

- Avoid too many open-ended questions. While you may be tempted to ask many open-ended questions within a survey, there are some factors to consider:

  - Limited detail - The data you obtain through open-ended questions may be limited in detail, and it certainly won’t be as rich as data obtained from a user interview. In short, respondents sometimes aren’t willing to go into detail when typing out responses.

  - Left with more questions than answers - Related to the above, you may not end up with the answers you’re looking for if you rely on open-ended survey questions to answer complex research questions.

  - Open-ended questions are often skipped - Filling out an open-ended survey question takes time and can be viewed as an annoyance by respondents. Because of that, these questions are often skipped.

  - Fatigue - Too many open-ended questions can quickly lead to fatigue, resulting in survey abandonment.

  Sometimes it’s necessary to ask open-ended survey questions. When doing so, be mindful of the above factors, and explore other survey question types first. If you end up with a lot of open-ended questions, reconsider your methodology. It may be more appropriate to start with an interview, and then run a survey to get a larger volume of answers.

- Avoid asking questions that force the respondent to estimate, because it’s hard to estimate accurately. What helps is to provide a designated time frame when asking common questions like “How many times have you XYZ?” Without a scoped time frame, each participant will come up with their own and provide an estimate. Instead, be crisp about the time frame you want the respondent to think back over, for example “How many times have you XYZ in the past 7 days?” This results in more accurate data across a consistent time frame.

- Make sure the survey flows well. Ask yourself whether the ordering of the questions makes sense. Questions about the same topic should be grouped together to avoid context switching. A best practice is to put your most important questions at the beginning of the survey, in case respondents drop off.

- Think about any built-in logic you’re using. Logic can help make a survey cleaner, make it appear shorter, and add some useful capabilities. It can also disrupt the flow, so make sure the flow makes sense for every logic-based scenario.

- Don’t make all the questions required. You may think that all questions should be required, since they’re all important to you. Doing so could result in survey abandonment, or in inaccurate data on questions that respondents would otherwise have skipped. Only mark a question as required if it is critical to your research questions or survey logic.

- Give respondents options that feel right. For multiple-choice questions, provide a way for respondents to answer with ‘other’ or ‘not applicable’. This way you don’t force respondents to answer a question inaccurately, which can result in inaccurate data. A bonus: you may learn something about your respondents if you see a significant number of them answering with ‘other’ or ‘not applicable’.

- Keep it short. Respondents tend to complete shorter surveys faster than longer surveys. If your survey has more than 20 questions, it’s probably too long. Keep the scope of the survey limited to answering the research questions, and eliminate any ‘nice-to-have’ questions. You’ll be surprised at how often ‘nice-to-have’ questions try to make their way into your survey. If you don’t have an immediate need for the data from a given survey question, eliminate it. You’ll save yourself a lot of time in the analysis, too.

- Let respondents know how much is left. It’s important that respondents can see how much of the survey remains. You can do this by showing the progress meter or percent-complete indicator that’s already built into the survey tool you use.

-

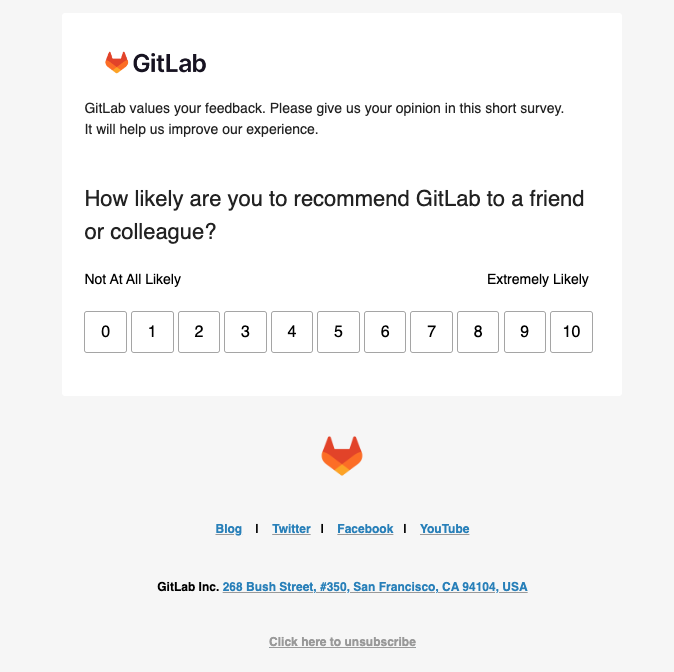

Think carefully before using an embedded survey question in an email. Consider the potential advantages and drawbacks of embedding survey questions directly into email distributions. While it may offer convenience and increased visibility, it can also present challenges such as response bias and limited formatting options. For instance, in our previous experience with embedded surveys in our paid NPS surveys, despite achieving a good response rate, we encountered a notable increase in one-click detractors (respondents who selected the answer to the question in the email, but did not complete any other questions within the remaining survey questions). This could indicate a tendency for respondents to provide quick, reflexive responses without fully considering their feedback, possibly due to the ease of responding within the email.

[Image: example of an embedded survey question]
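One way to act on the built-in logic tip above is to list every route a respondent can take through the survey before you launch it. The following is a minimal sketch, not a feature of any particular survey tool: the `SURVEY_FLOW` dictionary, the question IDs, and the `enumerate_paths` helper are all hypothetical, and the flow is assumed to be written out by hand from your survey's branching rules.

```python
from typing import Dict, List

# Hypothetical survey flow: each question ID maps to the question IDs that
# can follow it. An empty list marks the end of the survey.
SURVEY_FLOW: Dict[str, List[str]] = {
    "q1_uses_feature": ["q2_frequency", "q4_why_not"],  # "yes" -> q2, "no" -> q4
    "q2_frequency": ["q3_satisfaction"],
    "q3_satisfaction": ["q5_wrap_up"],
    "q4_why_not": ["q5_wrap_up"],
    "q5_wrap_up": [],
}


def enumerate_paths(flow: Dict[str, List[str]], start: str) -> List[List[str]]:
    """Return every route a respondent can take from `start` to an end question."""
    paths: List[List[str]] = []

    def walk(question: str, path: List[str]) -> None:
        if question in path:
            # A question that leads back to itself would trap the respondent.
            raise ValueError(f"Logic loop detected at {question!r}")
        path = path + [question]
        # A KeyError here means a branch points at a question that is not defined.
        next_questions = flow[question]
        if not next_questions:
            paths.append(path)
            return
        for next_question in next_questions:
            walk(next_question, path)

    walk(start, [])
    return paths


paths = enumerate_paths(SURVEY_FLOW, "q1_uses_feature")
for path in paths:
    # Print the number of questions on the route, then the route itself.
    print(len(path), " -> ".join(path))
```

Reading each printed route from top to bottom is a quick check that the questions still make sense in that order. The question count per route also shows how long the survey feels to respondents on each branch.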