GitLab Dedicated Logs

Working with logs

Support can access GitLab Dedicated tenant logs through our OpenSearch infrastructure. See Accessing logs to get started. OpenSearch works much like Kibana, but read Searching logs to learn about the differences.

When working on a GitLab Dedicated ticket, prioritize asking for information that will help identify applicable log entries, and start collecting this information as early in the ticket as possible. The specific information you need depends on the problem you are trying to solve. Good examples include the username, project path, project ID, exact date and time with time zone, correlation ID, and outgoing IP address.

The logs in OpenSearch will all be presented in the UTC time zone, regardless of the customer’s time zone.
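Customers often report times in their local time zone. The following minimal sketch converts a timestamp to UTC with the Python standard library before you set the OpenSearch date range. It assumes the customer gave an ISO 8601 timestamp that includes a UTC offset. The function name to_utc is hypothetical.

```python
from datetime import datetime, timezone

def to_utc(local_timestamp: str) -> str:
    """Convert an ISO 8601 timestamp with a UTC offset to a UTC ISO string."""
    parsed = datetime.fromisoformat(local_timestamp)
    if parsed.tzinfo is None:
        # Without an offset the conversion would be a guess; confirm the customer's time zone.
        raise ValueError("timestamp has no UTC offset")
    return parsed.astimezone(timezone.utc).isoformat()

# A customer in UTC-4 reports 09:24:43 local time.
print(to_utc("2024-07-08T09:24:43-04:00"))  # 2024-07-08T13:24:43+00:00
```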

Tagging logs while running tests

Customers can add a custom identifier, such as the ticket ID, to the user-agent field when testing. This makes it easier to filter logs related to the test.

For example:

curl -k -vvv -A"GitLabSupport012345" "https://tenant.gitlab-dedicated.com/users/sign_in"
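You can also confirm which entries carry the test tag after exporting them, for example by copying matching entries from the JSON tab. The following minimal sketch assumes the export is a list of JSON objects. It searches every string value because the field that holds the user agent can differ between log types. The function names and the "ua" field in the sample data are hypothetical.

```python
import json
from typing import Any, Iterable, List

def _contains(value: Any, tag: str) -> bool:
    """Recursively check whether any string inside value contains tag."""
    if isinstance(value, str):
        return tag in value
    if isinstance(value, dict):
        return any(_contains(v, tag) for v in value.values())
    if isinstance(value, list):
        return any(_contains(v, tag) for v in value)
    return False

def entries_with_tag(entries: Iterable[dict], tag: str) -> List[dict]:
    """Return only the log entries that mention the custom User-Agent tag."""
    return [entry for entry in entries if _contains(entry, tag)]

# Hypothetical exported entries; real field names may differ.
sample = json.loads("""[
  {"path": "/users/sign_in", "ua": "GitLabSupport012345"},
  {"path": "/users/sign_in", "ua": "Mozilla/5.0"}
]""")
print(len(entries_with_tag(sample, "GitLabSupport012345")))  # 1
```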

Preprod deployments

Use the GitLab Dedicated Preprod switchboard to find links to OpenSearch logs for a specific customer’s Preprod environment, when applicable.

Identifying tenants

Each customer has a dedicated set of credentials for examining logs in OpenSearch. The credentials and the URL for that customer’s OpenSearch instance are stored in the GitLab Dedicated - Support 1Password vault. In the vault, each customer is identified by a three-word internal reference, the <tenant name>, so you must use the <tenant name> to find the correct credentials for a customer. The tenant name is also part of the OpenSearch URL, for example opensearch.<tenant name>.gitlab-dedicated.com. Use Switchboard as the single source of truth for identifying a tenant’s internal reference from its GitLab Dedicated instance URL.

Accessing logs

To access the logs for a specific tenant, find the credentials stored in the GitLab Dedicated - Support vault and open the corresponding tenant URL listed there.

Once in the tenant’s OpenSearch site:

- Select the “Global” tenant

- Choose “Discover” in the sidebar under OpenSearch Dashboards

- On the next screen, you should see logs. Make sure that the gitlab-* index is selected.

It is recommended to start with the gitlab-* index because it has a timestamp field, which provides a useful skyline graph and allows time filtering. The git* index is less useful because it does not have a timestamp field defined or used. If you are unable to see the logs, try clearing cookies, local storage, and all session data for the site, and then repeat the steps above.

Logs are retained for 7 days in OpenSearch. Retention is longer in S3, but those logs are not accessible to Support. If you’re working on a ticket where access to older logs would have been helpful, flag it with the Support::SaaS::Log retention period reached macro (an internal macro for tracking purposes only). To preserve relevant log entries beyond the retention period, copy them, or screenshots of frequently occurring errors, into an internal note in the ticket or a field note.
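Before you start searching, check whether the time the customer reports still falls inside the 7-day OpenSearch retention window. Older requests follow the process in Log requests older than 7 days. The following minimal sketch assumes the time has already been converted to UTC. The function name is hypothetical, and the exact cutoff in OpenSearch may differ slightly from this calculation.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

RETENTION = timedelta(days=7)  # OpenSearch retention for GitLab Dedicated logs

def within_retention(event_time: datetime, now: Optional[datetime] = None) -> bool:
    """Return True if event_time (timezone-aware) is newer than the retention cutoff."""
    if event_time.tzinfo is None:
        raise ValueError("event_time must be timezone-aware")
    now = now or datetime.now(timezone.utc)
    return now - event_time < RETENTION

now = datetime(2024, 7, 15, 12, 0, tzinfo=timezone.utc)
print(within_retention(datetime(2024, 7, 10, 12, 0, tzinfo=timezone.utc), now))  # True
print(within_retention(datetime(2024, 7, 1, 12, 0, tzinfo=timezone.utc), now))   # False
```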

Sharing logs

Logs generated by the GitLab application can be shared directly with customers via a ticket. Logs that were not generated by the GitLab application cannot be shared with customers. You can determine whether a log entry was generated by the GitLab application by checking:

- Is the kubernetes.container_name one of the following: gitlab-shell, gitlab-workhorse, kas, registry, sidekiq, or webservice?

If yes: the log entry can be shared directly with the customer via the ticket.

- Does the fluentd_tag have a value of gitaly.app?

If yes: the log entry can be shared directly with the customer via the ticket.

If neither of the criteria above is met, do not share the log entry directly with the customer by default. If you think sharing the log entry would benefit the customer, read Sharing internal logs, data & graphs.
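When you review many exported entries, you can express the two sharing criteria above as a small check. The following minimal sketch assumes each entry is a dictionary that stores kubernetes.container_name and fluentd_tag either as flat dotted keys or as nested objects. The function names are hypothetical. The check does not replace the judgment described in Sharing internal logs, data & graphs.

```python
from typing import Any, Optional

SHAREABLE_CONTAINERS = {
    "gitlab-shell", "gitlab-workhorse", "kas", "registry", "sidekiq", "webservice",
}

def get_field(entry: dict, dotted: str) -> Optional[Any]:
    """Look up a field stored either as a flat dotted key or as nested objects."""
    if dotted in entry:
        return entry[dotted]
    current: Any = entry
    for part in dotted.split("."):
        if not isinstance(current, dict) or part not in current:
            return None
        current = current[part]
    return current

def can_share_directly(entry: dict) -> bool:
    """True if the entry meets either criterion for GitLab application logs."""
    if get_field(entry, "kubernetes.container_name") in SHAREABLE_CONTAINERS:
        return True
    return get_field(entry, "fluentd_tag") == "gitaly.app"

print(can_share_directly({"kubernetes": {"container_name": "sidekiq"}}))        # True
print(can_share_directly({"fluentd_tag": "gitaly.app"}))                       # True
print(can_share_directly({"kubernetes": {"container_name": "nginx-ingress"}}))  # False
```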

GitLab Dedicated customers can request access to application logs.

Log requests older than 7 days

If the customer requests logs for a period older than 7 days, a security issue should be created. Follow the same procedure as the Security - log request workflow.

Granting customers access to application logs

Customers may request access to their logs stored in an AWS S3 bucket to monitor their instance.

- In the ticket, ask the customer to provide the required information. In this case, it’s an IAM principal.

  - The IAM principal must be an IAM role principal or an IAM user principal.

- Open a Request for Help issue in the GitLab Dedicated issue tracker.

- Provide the IAM principal to the Environment Automation team.

- Provide the name of the S3 bucket to the customer.

Sharing log links within GitLab

When sharing log links with other GitLab team members, be sure to generate a Permalink. You can do this by following these steps:

- Use filters to find the log entries you are interested in

- Click Share in the upper right corner

- (Optional) Turn on the Short URL toggle

- Click Copy link

Share the link that is copied rather than the URL in your browser bar.

Log Identification

Not sure what to look for? Consider using a Self-Managed instance to replicate the bug or action you’re investigating. This lets you confirm whether an issue is specific to the GitLab Dedicated tenant, and it also gives you easily accessible logs to reference while searching through OpenSearch.

Support Engineers looking to configure a Self-Managed instance should review our Sandbox Cloud page for a list of company-provided hosting options.

Table vs JSON

Each entry in OpenSearch can be expanded to show more information by clicking the > icon. The Expanded document view has two tabs: Table and JSON. The Table will be shown by default. If you don’t see the information you are looking for when viewing Table, click JSON. In the JSON view, you’ll see a prettified version of the JSON content of the log entry. You can copy the JSON and parse it locally with tools like jq or jless.
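If you copy an entry from the JSON tab, you can reproduce something close to the Table view locally by flattening nested objects into dotted field names. The following minimal sketch uses only the Python standard library, as an alternative to jq or jless. The function name flatten and the sample entry are hypothetical.

```python
import json
from typing import Any, Dict

def flatten(obj: Any, prefix: str = "") -> Dict[str, Any]:
    """Flatten nested JSON objects into {"dotted.field.name": value} pairs."""
    flat: Dict[str, Any] = {}
    if isinstance(obj, dict):
        for key, value in obj.items():
            name = f"{prefix}.{key}" if prefix else key
            flat.update(flatten(value, name))
    else:
        flat[prefix] = obj  # lists and scalars are kept as-is
    return flat

entry = json.loads('{"kubernetes": {"container_name": "webservice"}, "status": 200}')
for field, value in sorted(flatten(entry).items()):
    print(f"{field}: {value}")
```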

Searching logs

Since GitLab Dedicated uses the Cloud Native Hybrid reference architecture, searching logs in OpenSearch is a bit different from searching in Kibana.

- In OpenSearch, terms can be freely typed in the search bar.

- By comparison, freely typing in the search bar is discouraged in Kibana.

- Fields can also be used as filters, similarly to Kibana.

Fields and Filters

The fields and filters available in OpenSearch can help you to find log entries more easily.

Fields

General fields:

- host: The GitLab host of the log. It can be <tenant name>-gitaly-* or <tenant name>-consul-2, etc.

- referrer: Holds the project path, for example https://tenant.gitlab-dedicated.com/example-group/test123

- path: The portion of the URL after the tenant hostname. It can provide useful information about what a particular request was attempting to do.

- message: The message that would be seen in the logs of a self-managed instance, for example:

xxx.xxx.xxx.xxx - - [08/Jul/2020:13:24:43 +0000] "GET /assets/webpack/commons-pages.projects.show-pages.projects.tree.show.21909065.chunk.js HTTP/1.1" 200 9316 "https://tenant.gitlab-dedicated.com/example-group/test123" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/102.0.5005.63 Safari/537.36" 1343 0.001 [default-gitlab-webservice-default-8181] [] xxx.xxx.xxx.xxx:8181 9309 0.000 200 fe130eac78314cwf352g3762397572cb

- subcomponent: The values in this field correspond to entries in GitLab’s log system. Possible values include production_json, application_json, api_json, auth_json, and graphql_json. You can use filters to collect all log entries associated with a specific subcomponent.
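The message value in the example above follows an NGINX-style access-log layout. You can split out its leading fields with a regular expression to see the client IP, request line, status, referrer, and user agent side by side. In the following minimal sketch, the pattern covers only the leading fields visible in the example and ignores the trailing upstream fields. The pattern is an assumption about the format, not a documented schema, and the function name is hypothetical.

```python
import re
from typing import Dict, Optional

# Assumed layout: IP - user [time] "METHOD path PROTOCOL" status bytes "referrer" "user agent" ...
INGRESS_LINE = re.compile(
    r'^(?P<client>\S+) - (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<bytes>\d+) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)

def parse_ingress_message(message: str) -> Optional[Dict[str, str]]:
    """Return the leading access-log fields, or None if the line does not match."""
    match = INGRESS_LINE.match(message)
    return match.groupdict() if match else None

sample = (
    'xxx.xxx.xxx.xxx - - [08/Jul/2020:13:24:43 +0000] '
    '"GET /assets/webpack/commons.js HTTP/1.1" 200 9316 '
    '"https://tenant.gitlab-dedicated.com/example-group/test123" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)" 1343 0.001'
)
parsed = parse_ingress_message(sample)
print(parsed["status"], parsed["path"])
```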

Gitaly related fields:

- grpc.request.glProjectPath: The actual GitLab project path.

- grpc.request.repoPath: The project hash ID path.

- grpc.request.repoStorage: The Gitaly storage that houses the repository.

- grpc.method: The name of the gRPC method.

- grpc.request.fullMethod: The fully qualified gRPC method name, including the service and method name.

SAML related fields:

- action: saml

- path: /users/auth/saml/callback

- controller: OmniauthCallbacksController

- location: https://tenant.gitlab-dedicated.com/

Filters

Filters are a powerful way to identify the log entries you care about.

Creating a filter

You can create a filter in OpenSearch by following these steps after you browse to OpenSearch Dashboards > Discover:

- Select Add filter

- Click Select a field first in the Field drop-down

- Click the Field you want (scroll to it or type to find it faster)

- Select an Operator

- In the Value field, add the string you want to filter for

To start, use the is operator. This lets you filter for all log entries where a field like status is 418. See Useful filters for examples that can help you craft a filter.

If you don’t get the results you expect and you are sure that your filter is correct, check that the date range is correct.
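Each filter you add in the UI becomes part of an OpenSearch query. The following minimal sketch builds a query DSL body that approximates an is filter with a match_phrase clause. This can help when you compare against a filter’s query DSL or share a precise filter definition with a colleague. The match_phrase mapping is an assumption, so the exact query that OpenSearch Dashboards generates may differ. The helper name is hypothetical.

```python
import json
from typing import Any, Dict

def is_filter(field: str, value: Any) -> Dict[str, Any]:
    """Approximate an OpenSearch Dashboards 'is' filter as a match_phrase clause."""
    return {"match_phrase": {field: value}}

query = {"query": {"bool": {"filter": [is_filter("status", 418)]}}}
print(json.dumps(query))
```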

Useful filters

- kubernetes.labels.app: Used to filter Kubernetes pods, for example nginx-ingress, webservice, etc.

- correlation_id: Used to find relevant log entries by correlation ID.

Use this OpenSearch filter to find logs related to the GitLab application:

- kubernetes.labels.release: gitlab

Filter by HTTP response status code

These OpenSearch filters can be used to find logs by their HTTP response status codes.

To find logs where the HTTP response status code is 422:

- Field: status

- Operator: is

- Value: 422

To find all logs where the HTTP response status code is in the 4xx client error class:

- Field: status

- Operator: is between

- Start of the range: 400

- End of the range: 499

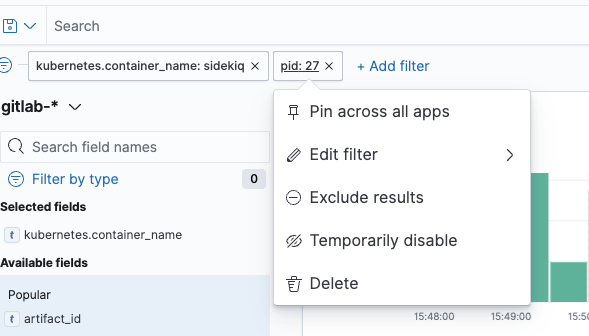

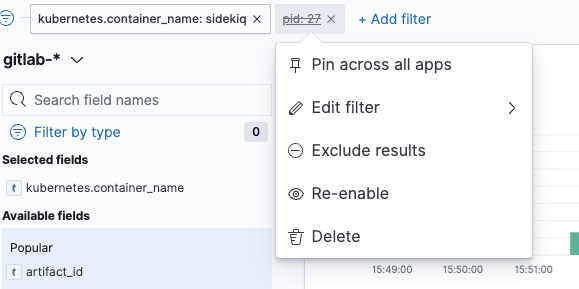
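The is between filter corresponds to a range clause. The following minimal sketch builds an equivalent query DSL body for the 4xx example. It assumes that both ends of the range are included (gte and lte). If the exact boundary behavior matters, check the filter’s query DSL in OpenSearch Dashboards. The helper name is hypothetical.

```python
import json
from typing import Any, Dict

def between_filter(field: str, start: int, end: int) -> Dict[str, Any]:
    """Range clause that includes both start and end (gte/lte)."""
    return {"range": {field: {"gte": start, "lte": end}}}

query = {"query": {"bool": {"filter": [between_filter("status", 400, 499)]}}}
print(json.dumps(query, indent=2))
```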

Disabling and re-enabling filters

It can be useful to temporarily disable a filter to change the view of logs.

To temporarily disable a filter, click the text of the filter to get a menu of options, and select Temporarily disable.

To re-enable a filter, click the text of the filter and select Re-enable.

Creating indexes for fields

Sometimes an OpenSearch field will not be indexed and you will not be able to filter on it. This is indicated by a warning triangle with a ! next to the value for that field.

To refresh the mappings and update the indexes (using the Support login):

- Open the hamburger menu (top left)

- Click Stack Management (in the Management section, right at the bottom)

- Click Index Patterns

- Click the pattern you want to refresh

- Click the “refresh” (circular arrow) icon at the top right, between the star and trash can icons

- Click Refresh in the dialog

The Fields count will go up.

You can now go back to the log search page and filter on the field.

Examples

Filter by correlation ID

GitLab instances log a unique request tracking ID (known as the “correlation ID”) for most requests. An important part of troubleshooting problems in GitLab is finding relevant log entries with a correlation ID. OpenSearch lets you filter by correlation ID. You can get the correlation ID from information provided by the customer or by looking through OpenSearch logs.

To show all log entries for a specific correlation ID, you can:

- Select Add filter

- Click Select a field first

- Choose correlation_id

- In the Operator drop-down, select is

- In the Value field, put the correlation ID
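After you filter on a correlation ID, reading the entries in time order is often the quickest way to follow a request across components. The following minimal sketch works on entries exported as JSON. It assumes each entry has correlation_id, @timestamp (ISO 8601 in UTC), and a nested kubernetes.container_name. The function name and sample data are hypothetical.

```python
from datetime import datetime
from typing import Dict, List

def timeline(entries: List[Dict], correlation_id: str) -> List[str]:
    """Return 'timestamp container' lines for one correlation ID, oldest first."""
    matching = [e for e in entries if e.get("correlation_id") == correlation_id]
    # Parse timestamps so ordering does not depend on string formatting.
    matching.sort(key=lambda e: datetime.fromisoformat(e["@timestamp"].replace("Z", "+00:00")))
    return [
        f'{e["@timestamp"]} {e.get("kubernetes", {}).get("container_name", "?")}'
        for e in matching
    ]

entries = [
    {"@timestamp": "2024-07-08T13:24:44.120Z", "correlation_id": "01HXYZ",
     "kubernetes": {"container_name": "sidekiq"}},
    {"@timestamp": "2024-07-08T13:24:43.900Z", "correlation_id": "01HXYZ",
     "kubernetes": {"container_name": "webservice"}},
    {"@timestamp": "2024-07-08T13:24:43.950Z", "correlation_id": "other",
     "kubernetes": {"container_name": "webservice"}},
]
for line in timeline(entries, "01HXYZ"):
    print(line)
```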

Identify a deleted group or project

Information about deleted groups and projects is available in the Audit Events. The customer should be able to review this information in the Admin Area. Provided the deletion occurred within the log retention window, additional information can be sought in OpenSearch. In order to identify more information about a deleted project or group in OpenSearch, you can use this information to guide the filters that you use.

- Select Add filter

- Click Select a field first

- Choose meta.caller_id

- In the Operator drop-down, select is

- In the Value box, add GroupsController#destroy

You can look at the values in username, user_id (or meta.user_id) to get more information about the user that issued the deletion request.
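If you export the matching entries as JSON, you can list who issued the deletion requests. The following minimal sketch assumes each entry stores meta.caller_id, username, and user_id or meta.user_id as flat keys. Adjust the lookups if your export nests these fields. The function name and sample data are hypothetical.

```python
from typing import Dict, List, Tuple

def deletion_requests(
    entries: List[Dict], caller_id: str = "GroupsController#destroy"
) -> List[Tuple[str, str]]:
    """Return (username, user_id) pairs for entries whose meta.caller_id matches."""
    results = []
    for entry in entries:
        if entry.get("meta.caller_id") != caller_id:
            continue
        # Fall back to meta.user_id when user_id is missing.
        user_id = entry.get("user_id", entry.get("meta.user_id"))
        results.append((entry.get("username", "?"), str(user_id)))
    return results

entries = [
    {"meta.caller_id": "GroupsController#destroy", "username": "alice", "meta.user_id": 42},
    {"meta.caller_id": "GroupsController#show", "username": "bob", "user_id": 7},
]
print(deletion_requests(entries))  # [('alice', '42')]
```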

Identify a created or deleted user

Information about a user being created or deleted is available in the Audit Events. The customer should be able to review this information in the Admin Area. Provided the user was created or deleted within the log retention window, information about these events can be sought in OpenSearch. In order to identify more information about a created or deleted user, you can use this information to guide the filters that you want to use. For these events, we are interested in RegistrationsController.

- Select Add filter

- Click Select a field first

- Choose controller

- In the Operator drop-down, select is

- In the Value box, add RegistrationsController

- Click Save

You will now have a list of user creation and deletion events. Use the action field to look for the kinds of events you are interested in.
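To see at a glance which kinds of RegistrationsController events occurred, you can group exported entries by their action value. The following minimal sketch assumes flat controller, action, and username fields. The function name and the sample values, including the action names, are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List

def users_by_action(entries: List[Dict]) -> Dict[str, List[str]]:
    """Group usernames by action for RegistrationsController entries."""
    grouped: Dict[str, List[str]] = defaultdict(list)
    for entry in entries:
        if entry.get("controller") == "RegistrationsController":
            grouped[entry.get("action", "?")].append(entry.get("username", "?"))
    return dict(grouped)

entries = [
    {"controller": "RegistrationsController", "action": "create", "username": "new-user"},
    {"controller": "RegistrationsController", "action": "destroy", "username": "old-user"},
    {"controller": "SessionsController", "action": "create", "username": "someone"},
]
print(users_by_action(entries))
```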

Find information about a specific pipeline

Once you know the ID of a specific pipeline, you can get information from the logs about how that pipeline was processed. Given the role that Sidekiq plays in processing CI pipelines, let’s check the Sidekiq logs.

In this example, we’ll use two filters: one to get the logs from the sidekiq container and another to filter on the specific pipeline ID.

Add the first filter for all of the logs from the sidekiq container:

- Select Add filter

- Click Select a field first

- Choose kubernetes.container_name

- In the Operator drop-down, select is

- In the Value box, add sidekiq

- Click Save

Add the second filter so that you have all of the sidekiq logs about the specific pipeline:

- Select Add filter

- Click Select a field first

- Choose meta.pipeline_id

- In the Operator drop-down, select is

- In the Value box, add the pipeline ID for the pipeline that you care about

- Click Save

You can now read through the Sidekiq logs related to that specific pipeline.
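Filters added in the filter bar are combined with AND, so only entries that match both filters appear. The following minimal sketch shows an equivalent query DSL body. It approximates each is filter with match_phrase, which is an assumption, so the exact output of OpenSearch Dashboards may differ. The function name and the pipeline ID are placeholders.

```python
import json
from typing import Any, Dict

def pipeline_sidekiq_query(pipeline_id: int) -> Dict[str, Any]:
    """Query body for Sidekiq log entries about one pipeline (both clauses must match)."""
    return {
        "query": {
            "bool": {
                "filter": [
                    {"match_phrase": {"kubernetes.container_name": "sidekiq"}},
                    {"match_phrase": {"meta.pipeline_id": pipeline_id}},
                ]
            }
        }
    }

print(json.dumps(pipeline_sidekiq_query(123456), indent=2))
```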

Identify who viewed CI/CD Variables

While we do not specifically log changes made to CI/CD variables in our audit logs for group events, you can use OpenSearch to see who may have viewed the variables page. Viewing the variables page is required to change the variables in question. Viewing the page does not necessarily mean that a user changed the variables, but it should help narrow down the list of users who could have done so. (If you’d like us to log these changes, we have an issue open here to collect your comments.)

- Select Add filter

- Click Select a field first

- Choose controller

- In the Operator drop-down, select is

- In the Value box, add Projects::VariablesController

- Click Save

You now have a list of logs to sort through. You can get a bit more information about the users involved by refining the query a bit further. In the Search field names box, type username. Click the + symbol to Add field as column. In the list of logs, you’ll now see the username associated with each log entry where the CI/CD variables were viewed.
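If you export the entries, you can count them per username to see who viewed the variables page and how often. The following minimal sketch assumes flat controller and username fields. The function name and the usernames in the sample are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple

def variable_page_viewers(entries: List[Dict]) -> List[Tuple[str, int]]:
    """Count Projects::VariablesController requests per username, most frequent first."""
    counts = Counter(
        entry.get("username") or "(no username)"
        for entry in entries
        if entry.get("controller") == "Projects::VariablesController"
    )
    return counts.most_common()

entries = [
    {"controller": "Projects::VariablesController", "username": "alice"},
    {"controller": "Projects::VariablesController", "username": "bob"},
    {"controller": "Projects::VariablesController", "username": "alice"},
    {"controller": "ProjectsController", "username": "carol"},
]
print(variable_page_viewers(entries))  # [('alice', 2), ('bob', 1)]
```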

Retrieve and inspect SAML Responses

When troubleshooting unexpected behavior in instance-wide SAML single sign-on (SSO) for GitLab Dedicated customers, you can use OpenSearch to retrieve the SAML response.

- Select Add filter

- Click Select a field first

- Choose controller

- In the Operator drop-down, select is

- In the Value box, add OmniauthCallbacksController

- Click Save

After you adjust the date range, you should have a list of logs to look through. You can get more information about these authentication attempts by refining the query further. In the Search field names box, type action. Click the + symbol to Add field as column. In the list of logs, you’ll now see the action associated with each authentication attempt. The action field will indicate whether the authentication attempt resulted in a failure.

You may wish to inspect an individual SAML response. When you have identified the log entry that includes the SAML response you wish to inspect, click the right arrow to view the Expanded document.

- In the params section, you’ll see a JSON object that has a key called SAMLResponse. (The data in the value is the base64-encoded SAML response.)

  - Sometimes this key is too large, so the log will only contain the value truncated. In this case, you will need to ask the customer to capture the SAML response themselves and send it to you.

- Save the long string in the value field (it often ends with =) to a file like response.txt

- Decode the base64-encoded value with base64 -d response.txt.

  - To make inspection easier, write the output of the base64 decode into an XML file, for example base64 -d response.txt > /tmp/samlresponse.xml.

  - This file can be directly opened in some browsers like Firefox and Google Chrome to make it more readable.

Read more about what to look for in the SAML response.
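You can also do the base64 steps in Python, which makes it easy to detect a truncated value and extract a few elements to review. The following minimal sketch uses only the standard library. It assumes that a truncated log entry contains the literal value truncated, as described above. The function name is hypothetical, and the sample is a tiny made-up response, not a real SAML assertion.

```python
import base64
import binascii
import xml.etree.ElementTree as ET

SAMLP = "urn:oasis:names:tc:SAML:2.0:protocol"
SAML = "urn:oasis:names:tc:SAML:2.0:assertion"

def decode_saml_response(value: str) -> ET.Element:
    """Decode a base64 SAMLResponse value from the params section and parse the XML."""
    cleaned = "".join(value.split())  # drop line breaks picked up while copying
    if cleaned == "truncated":
        raise ValueError("SAMLResponse was truncated in the logs; ask the customer for it")
    try:
        xml_bytes = base64.b64decode(cleaned, validate=True)
    except binascii.Error as exc:
        raise ValueError(f"value is not valid base64: {exc}") from exc
    return ET.fromstring(xml_bytes)

# Made-up sample response, encoded the way it would appear in the logs.
sample_xml = (
    f'<samlp:Response xmlns:samlp="{SAMLP}" xmlns:saml="{SAML}">'
    '<samlp:Status><samlp:StatusCode Value="urn:oasis:names:tc:SAML:2.0:status:Success"/>'
    "</samlp:Status><saml:Assertion><saml:Subject><saml:NameID>user@example.com"
    "</saml:NameID></saml:Subject></saml:Assertion></samlp:Response>"
)
encoded = base64.b64encode(sample_xml.encode("utf-8")).decode("ascii")

root = decode_saml_response(encoded)
status_code = root.find(f"{{{SAMLP}}}Status/{{{SAMLP}}}StatusCode")
name_id = root.find(f".//{{{SAML}}}NameID")
print(status_code.get("Value"), name_id.text)
```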

Debug a failed global search request

If a search request fails, the UI will likely show an error with a status code. For such failures, you can find more logs with the following steps, using a 500 error as an example:

- Select Add filter

- Click Select a field first

- Choose status

- In the Operator drop-down, select is

- In the Value box, add 500

- Add another filter for the failed search term. Choose uri

- In the Operator drop-down, select is, and in the Value box add /search?search=test

- Click Save

You can then filter by correlation_id only, to select the failed occurrence. Note the exact time in the @timestamp field to use in the next filter.

- Start a new search in a duplicated OpenSearch tab.

- Using the timestamp you noted above, copy the value of kubernetes.host and filter for logs within that @timestamp frame.

- Fine-tune the results by adding more filters, for example Field: message, Operator: is one of, Value: elasticsearch, to see any logs with the term elasticsearch.

Read more on troubleshooting Elasticsearch for potential next steps.
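To narrow results to the @timestamp of the failed request, you can compute a small window around it and use the start and end as absolute times in the date picker. In the following minimal sketch, the 30-second padding is an arbitrary assumption that you can adjust. The function name is hypothetical.

```python
from datetime import datetime, timedelta
from typing import Tuple

def window_around(timestamp: str, seconds: int = 30) -> Tuple[str, str]:
    """Return (start, end) ISO timestamps padded around an OpenSearch @timestamp."""
    center = datetime.fromisoformat(timestamp.replace("Z", "+00:00"))
    pad = timedelta(seconds=seconds)
    return (center - pad).isoformat(), (center + pad).isoformat()

print(window_around("2024-07-08T13:24:43.512Z"))
```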

Debug Hosted Runners for GitLab Dedicated

To debug tickets about Hosted Runners for GitLab Dedicated, verify that the customer is using Hosted Runners. Refer to the Hosted Runners for GitLab Dedicated documentation page to view OpenSearch filters you can use to filter these logs.

Debug Duo related errors

If a Duo Chat feature fails, the UI will most likely show the customer one of the error codes listed in the documentation.

To gather more logs on the actual cause of the failure, first filter for the Duo chat code the customer provided using the duo_chat_error_code field.

You can then review the values in ai_component, ai_event_name, class, error, and message to gather more information on the actual error message.
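To compare several failures at once, you can extract the fields listed above from exported entries. The following minimal sketch assumes these fields are top-level keys in each exported entry. The function name, the error codes, and the field values in the sample are hypothetical.

```python
from typing import Dict, List

DUO_FIELDS = ("ai_component", "ai_event_name", "class", "error", "message")

def duo_error_details(entries: List[Dict], error_code: str) -> List[Dict]:
    """Keep the diagnostic fields for entries with the given duo_chat_error_code."""
    return [
        {field: entry.get(field) for field in DUO_FIELDS}
        for entry in entries
        if entry.get("duo_chat_error_code") == error_code
    ]

entries = [
    {"duo_chat_error_code": "EXAMPLE-1", "ai_component": "example_component",
     "ai_event_name": "example_event", "class": "ExampleClass",
     "error": "timeout", "message": "example message"},
    {"duo_chat_error_code": "EXAMPLE-2", "error": "other"},
]
for details in duo_error_details(entries, "EXAMPLE-1"):
    print(details)
```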
