Production Architecture

This is a Controlled Document

In line with GitLab’s regulatory obligations, changes to controlled documents must be approved or merged by a code owner. All contributions are welcome and encouraged.

Our GitLab.com core infrastructure is primarily hosted in Google Cloud Platform’s (GCP) us-east1 region (see Regions and Zones).

This document does not cover servers that are not integral to the public-facing operations of GitLab.com.

Purpose

This page provides an overview of the production architecture for GitLab.com.

Scope

The compute and network layout that runs GitLab.com

Roles and Responsibilities

| Role | Responsibility |
|---|---|
| Infrastructure Team | Responsible for configuration and management |
| Infrastructure Management (Code Owners) | Responsible for approving significant changes and exceptions to this procedure |

Procedure

Related Pages

- Application Architecture documentation

- GitLab.com Settings

- GitLab.com Rate Limits

- Monitoring of GitLab.com

- GitLab performance monitoring documentation

- Performance of the Application

- Gemnasium Service Production Architecture

- CI Service Architecture

- dev.gitlab.org Architecture

- ops.gitlab.net Architecture

- version.gitlab.com Architecture

Current Architecture

GitLab.com Production Architecture

Source, GitLab internal use only.

Most of GitLab.com is deployed on Kubernetes using the GitLab cloud native Helm chart. The main exceptions are datastore services such as PostgreSQL, Gitaly, Redis, and Elasticsearch.

Cluster Configuration

GitLab.com uses four Kubernetes clusters for production, with similarly configured clusters for staging.

One cluster is a regional cluster in the us-east1 region, and the remaining three are zonal clusters that correspond to the GCP availability zones us-east1-b, us-east1-c, and us-east1-d.

The reasons for having multiple clusters are as follows:

- Avoiding cross-zone network traffic from high-bandwidth services

- Isolation of workloads

- Isolation of maintenance changes and upgrades to the clusters

For more information on why we chose to split traffic across multiple zonal clusters, see this issue exploring alternatives to the single regional cluster. The regional cluster is still used for services such as Sidekiq and Kas that do not have high-bandwidth requirements, and for other services that are a better fit for a regional deployment.
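The placement rule above can be sketched as a small function that decides which clusters a workload is deployed to and which cluster serves a request from a given zone. This is a minimal illustration under stated assumptions, not the real deployment tooling: the cluster names are hypothetical, and treating a workload such as `webservice` as high-bandwidth is an assumption, since this page only names Sidekiq and Kas as regional workloads.

```python
from dataclasses import dataclass

# Zonal clusters correspond to these GCP zones (as described above).
ZONES = ("us-east1-b", "us-east1-c", "us-east1-d")

# Hypothetical cluster name, used only for illustration.
REGIONAL_CLUSTER = "regional-us-east1"


@dataclass(frozen=True)
class Workload:
    name: str
    high_bandwidth: bool


def target_clusters(workload: Workload) -> list[str]:
    """Return the clusters a workload would be deployed to in this sketch."""
    if workload.high_bandwidth:
        # One copy per zonal cluster, so each zone serves its own traffic
        # without sending it across zones. Names are hypothetical.
        return [f"zonal-{zone}" for zone in ZONES]
    # Low-bandwidth services (for example Sidekiq and Kas) go to the regional cluster.
    return [REGIONAL_CLUSTER]


def cluster_for_zone(workload: Workload, zone: str) -> str:
    """Pick the cluster that should serve a request originating in `zone`."""
    if not workload.high_bandwidth:
        return REGIONAL_CLUSTER
    if zone not in ZONES:
        raise ValueError(f"unknown zone: {zone}")
    return f"zonal-{zone}"
```

For example, `cluster_for_zone(Workload("webservice", high_bandwidth=True), "us-east1-c")` returns `"zonal-us-east1-c"`, keeping that request's traffic inside us-east1-c, while a Sidekiq workload always resolves to the regional cluster.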

In keeping with GitLab’s value of transparency, all Kubernetes cluster configuration for GitLab.com, including the infrastructure definitions, is public.

The following projects are used to manage the installation:

- k8s-workloads/gitlab-com: Contains the GitLab.com configuration for the GitLab helm chart.

- k8s-workloads/gitlab-helmfiles: Contains the configuration for cluster logging, monitoring, and integrations.

- argocd/apps: Contains the ArgoCD Applications for all services.

- argocd/config: Contains the top-level Applications, AppProjects, Repositories, cluster inventory and RBAC configuration for ArgoCD.

- config-mgmt: Terraform configuration for the cluster. All resources necessary to run the cluster are configured here, including the cluster itself, node pools, service accounts, and IP address reservations.

- charts: Charts created by the infrastructure department to deploy services that don’t have community charts.

All inbound web, Git HTTP, and Git SSH requests are received at Cloudflare, which uses HAProxy as its origin.

For Sidekiq, multiple pods are configured with Sidekiq cluster to divide Sidekiq queues into different resource groups. See the chart documentation for Sidekiq for more details.
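As a rough illustration of dividing queues into resource groups, the sketch below assigns each worker to a group using first-match-wins rules and then groups queue names by the set of pods that would process them. The worker attributes, rule order, and group names are hypothetical, and this is not the routing-rule syntax the GitLab chart uses; the Sidekiq chart documentation describes the real configuration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Worker:
    name: str
    urgency: str            # hypothetical values: "high" or "low"
    resource_boundary: str  # hypothetical values: "cpu", "memory", or "unknown"


# Hypothetical routing rules, evaluated in order; the first match wins.
# The final catch-all rule guarantees every worker is assigned a group.
ROUTING_RULES: list[tuple[Callable[[Worker], bool], str]] = [
    (lambda w: w.urgency == "high" and w.resource_boundary == "cpu", "urgent-cpu-bound"),
    (lambda w: w.urgency == "high", "urgent-other"),
    (lambda w: w.resource_boundary == "memory", "memory-bound"),
    (lambda w: True, "catchall"),
]


def resource_group(worker: Worker) -> str:
    """Return the resource group (set of pods) that would process this worker's queue."""
    for matches, group in ROUTING_RULES:
        if matches(worker):
            return group
    raise LookupError(f"no routing rule matched {worker.name}")


def group_queues(workers: list[Worker]) -> dict[str, list[str]]:
    """Group worker queue names by resource group."""
    groups: dict[str, list[str]] = {}
    for worker in workers:
        groups.setdefault(resource_group(worker), []).append(worker.name)
    return groups
```

Keeping each group on its own pods means, for instance, that a burst of memory-heavy jobs competes only with other memory-bound work rather than with urgent queues.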

Monitoring and Logging

Monitoring for GitLab.com runs in the same clusters as the application. Metrics are aggregated in the ops cluster using Mimir, which is made up of multiple components and which we query through Grafana.

Prometheus is deployed with the kube-prometheus-stack Helm chart in the monitoring namespace. Every cluster runs its own Prometheus, which gives us some sharding of metrics collection by cluster.

Source, GitLab internal use only
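Because every cluster runs its own Prometheus, each instance scrapes only its own cluster's targets, and a cross-cluster view is assembled in the central store. The sketch below models that with plain Python data: each per-cluster shard tags its samples with a `cluster` label (Prometheus can attach such labels through its `external_labels` setting), and a query sums across shards in the style of PromQL's `sum by`. The metric labels, values, and cluster names are hypothetical, and this is not how Mimir is implemented.

```python
from collections import defaultdict

# A sample is (labels, value), where labels maps label names to label values.
Sample = tuple[dict[str, str], float]


def tag_with_cluster(samples: list[Sample], cluster: str) -> list[Sample]:
    """Attach a cluster label, as a per-cluster Prometheus would via external labels."""
    return [({**labels, "cluster": cluster}, value) for labels, value in samples]


def sum_by(samples: list[Sample], label: str) -> dict[str, float]:
    """Aggregate like PromQL `sum by (label)`; samples without the label share the key ""."""
    totals: dict[str, float] = defaultdict(float)
    for labels, value in samples:
        totals[labels.get(label, "")] += value
    return dict(totals)
```

With `shard_b = tag_with_cluster([({"job": "webservice"}, 120.0)], "zonal-us-east1-b")` and `shard_c = tag_with_cluster([({"job": "webservice"}, 80.0)], "zonal-us-east1-c")`, calling `sum_by(shard_b + shard_c, "job")` gives `{"webservice": 200.0}`, while `sum_by(shard_b + shard_c, "cluster")` keeps the per-cluster breakdown.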

Alerting for the clusters uses generated rules that roll up into our overall SLA for the platform.

Logging is configured using Tanka: the logs for every pod are forwarded to a unique Elasticsearch index, and fluentd is deployed in the logging namespace.
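A minimal sketch of deriving an index name from a log record's Kubernetes metadata follows. The naming scheme is hypothetical, and it takes one reading of "unique index": one index per workload (namespace plus container) per day rather than one per pod instance, since pod names change on every rollout. The character handling follows Elasticsearch's documented index-name rules: names must be lowercase, cannot contain characters such as `\`, `/`, `*`, `?`, `"`, `<`, `>`, `|`, spaces, `,`, `#`, or `:`, and cannot start with `-`, `_`, or `+`.

```python
import re
from datetime import date

# Characters Elasticsearch does not allow in index names (plus any whitespace).
_INVALID_CHARS = re.compile(r'[\\/*?"<>|\s,#:]')


def index_name(env: str, namespace: str, container: str, day: date) -> str:
    """Build a hypothetical daily index name such as gprd-gitlab-webservice-2024.05.01."""
    raw = f"{env}-{namespace}-{container}-{day:%Y.%m.%d}"
    # Index names must be lowercase and free of reserved characters.
    name = _INVALID_CHARS.sub("-", raw.lower())
    # Index names may not start with "-", "_", or "+".
    return name.lstrip("-_+")
```

Keying on the container rather than the pod name keeps the number of indices bounded no matter how often pods are rescheduled.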

Cluster Configuration Updates

A single namespace, gitlab, is used exclusively for the GitLab application.

Chart configuration is set in the k8s-workloads/gitlab-com project, whose YAML configuration files define defaults for the GitLab.com environment along with per-environment overrides.
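The defaults-plus-overrides layout can be illustrated with a recursive merge in which nested maps are combined and any other value from the override replaces the default. This is a sketch under stated assumptions: the keys below are hypothetical, and while it approximates how Helm combines multiple values files (later files win, maps are merged, lists are replaced), it ignores Helm details such as setting a key to `null` to delete a default, and it is not the tooling the project actually uses.

```python
from copy import deepcopy
from typing import Any


def deep_merge(defaults: dict[str, Any], overrides: dict[str, Any]) -> dict[str, Any]:
    """Return a new dict: nested dicts are merged recursively, other values are replaced."""
    merged = deepcopy(defaults)
    for key, value in overrides.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            # Scalars and lists from the override replace the default wholesale.
            merged[key] = deepcopy(value)
    return merged


# Hypothetical chart values: shared defaults and one environment's overrides.
DEFAULTS = {
    "gitlab": {
        "webservice": {"minReplicas": 2, "resources": {"requests": {"cpu": "1"}}},
    },
}
GPRD_OVERRIDES = {"gitlab": {"webservice": {"minReplicas": 10}}}
```

Here `deep_merge(DEFAULTS, GPRD_OVERRIDES)` raises `minReplicas` to 10 while keeping the default CPU request, and leaves `DEFAULTS` itself unchanged so it can be reused for other environments.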

Changes to this configuration are applied by the SRE and Delivery teams after review through a merge request (MR) workflow.

When a change is approved on GitLab.com, the pipeline that applies it runs on a separate operations environment, so that configuration updates do not depend on the availability of the production environment.

For namespaces that host other services, such as logging and monitoring, a similar GitOps workflow is followed using the gitlab-helmfiles and tanka-deployments projects.

GitLab.com does not depend on itself to pull the images used in our Kubernetes clusters. Instead, we pull CNG images from our dev.gitlab.org container registry, so that during an incident we can still pull images and run our applications as necessary. Images that we do not build ourselves may be pulled from Docker Hub. These images are also mirrored on Google’s Container Registry product, and our GKE nodes are configured with this mirror from the start, which provides further redundancy if Docker Hub is unavailable.
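The resulting pull order can be sketched as a function that returns candidate image references in priority order. It models the policy described above rather than the actual GKE or containerd configuration: the `cng/` repository prefix and the registry host used for dev.gitlab.org are hypothetical, while `mirror.gcr.io` is the host name of Google's Docker Hub mirror.

```python
# Hypothetical values: the prefix that marks our own CNG images and the
# dev.gitlab.org registry host are assumptions for illustration.
CNG_PREFIX = "cng/"
CNG_REGISTRY = "dev.gitlab.org"
# Google's mirror of Docker Hub, and Docker Hub itself.
DOCKER_HUB_MIRROR = "mirror.gcr.io"
DOCKER_HUB = "docker.io"


def pull_candidates(repository: str, tag: str) -> list[str]:
    """Return image references in the order a node would try them in this sketch."""
    if repository.startswith(CNG_PREFIX):
        # Images we build come from dev.gitlab.org, never from GitLab.com itself.
        return [f"{CNG_REGISTRY}/{repository}:{tag}"]
    if "/" not in repository:
        # Docker Hub official images live under the "library/" namespace.
        repository = f"library/{repository}"
    # Prefer the mirror, falling back to Docker Hub if the mirror cannot serve it.
    return [
        f"{DOCKER_HUB_MIRROR}/{repository}:{tag}",
        f"{DOCKER_HUB}/{repository}:{tag}",
    ]
```

The property that matters is that no candidate points at GitLab.com's own registry, so an incident on GitLab.com cannot block image pulls.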

Database Architecture

Source, GitLab internal use only

Redis Architecture

Source, GitLab internal use only

Source, GitLab internal use only

GitLab.com uses several Redis shards for different use cases, such as caching, rate limiting, and Sidekiq queueing. More information on the Redis shards, their configuration, and their usage can be found in the chef-repo and GitLab projects. The relationship between Redis instances and GitLab deployments can be tracked via this Grafana link.
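On the application side, sharding Redis by use case amounts to resolving a separate connection per use case. The sketch below shows one way to express that, with a fallback to a default instance when no dedicated shard is configured. The use-case names, URLs, and fallback behavior are hypothetical illustrations; the chef-repo and GitLab projects document the real shard configuration.

```python
from enum import Enum


class UseCase(Enum):
    CACHE = "cache"
    RATE_LIMITING = "rate_limiting"
    SIDEKIQ = "sidekiq"


def redis_url(use_case: UseCase, shards: dict[UseCase, str], default_url: str) -> str:
    """Return the dedicated shard for a use case, or the default instance if none is configured."""
    return shards.get(use_case, default_url)


# Hypothetical configuration with dedicated cache and Sidekiq shards only.
SHARDS = {
    UseCase.CACHE: "redis://redis-cache.example.internal:6379",
    UseCase.SIDEKIQ: "redis://redis-sidekiq.example.internal:6379",
}
DEFAULT_URL = "redis://redis-default.example.internal:6379"
```

One general reason to separate shards this way is that each can be tuned for its workload, for example allowing key eviction on a cache shard while a queueing shard must never drop data.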

When needed, we also address CPU saturation by making application changes. Some of the techniques for this are discussed in this video.

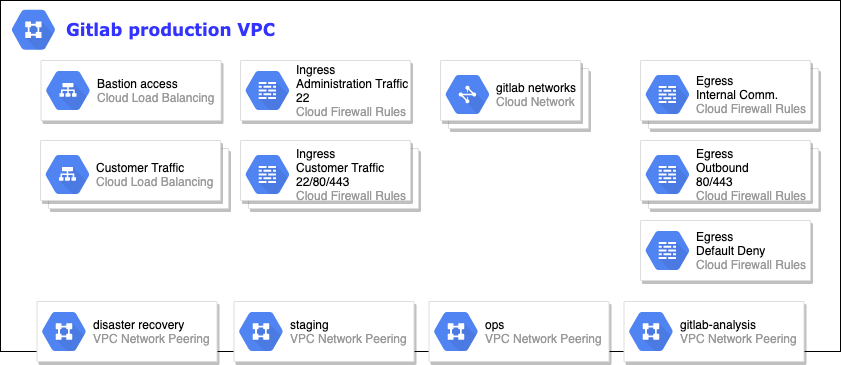

Network Architecture

Source, GitLab internal use only

Our network infrastructure consists of a network for each class of server, as defined in the Current Architecture diagram. Each network contains a similar ruleset, as defined above.

We currently peer our ops network. This network hosts most of our monitoring infrastructure, and we allow InfluxDB and Prometheus data to flow into it to populate our metrics systems.

For alert management, we peer all of our networks together so that we can run a cluster of Alertmanager instances, which ensures that alerts still go out if an individual environment fails.

No application or customer data flows through these network peers.

DNS & WAF

GitLab uses Cloudflare’s Web Application Firewall (WAF). We host our Domain Name System (DNS) zones with Cloudflare (gitlab.com, gitlab.net) and Amazon Route 53 (gitlab.io and others). For more information about Cloudflare, see the runbook and the architecture overview.

TLD Zones

For DNS names, all services that provide GitLab as a service shall be in the gitlab.com domain, and ancillary services that support GitLab (e.g., Chef, ChatOps, VPN, logging, monitoring) shall be in the gitlab.net domain.
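The naming rule can be expressed as a check that could run, for example, when reviewing a new DNS record. This is a minimal sketch: the function names and example hostnames are hypothetical. Note that the zone test matches on a label boundary, because a plain string-suffix check would wrongly accept a name such as `notgitlab.com`.

```python
SERVICE_ZONE = "gitlab.com"    # services that provide GitLab as a service
ANCILLARY_ZONE = "gitlab.net"  # supporting services such as Chef, ChatOps, VPN


def expected_zone(provides_gitlab_service: bool) -> str:
    """Return the DNS zone a service belongs in under the naming rule."""
    return SERVICE_ZONE if provides_gitlab_service else ANCILLARY_ZONE


def in_zone(hostname: str, zone: str) -> bool:
    """True if hostname is the zone apex or a subdomain of it (label-boundary match)."""
    host = hostname.rstrip(".").lower()
    return host == zone or host.endswith("." + zone)


def follows_naming_policy(hostname: str, provides_gitlab_service: bool) -> bool:
    """Check a hostname against the gitlab.com / gitlab.net split."""
    return in_zone(hostname, expected_zone(provides_gitlab_service))
```

For instance, `follows_naming_policy("chatops.gitlab.net", False)` is `True`, while the same name under gitlab.com would be flagged.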

Remote Access

Access to production is granted only to those who need it, through bastion hosts. Instructions for configuring access through bastions are found in the bastion runbook.
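Routing SSH through a bastion is commonly expressed with OpenSSH's `ProxyJump` option. The sketch below renders such an `ssh_config` entry as an illustration only: the host names, pattern, and user are hypothetical, and the bastion runbook has the actual values and any additional options required. Python's `fnmatchcase` is used here as an approximation of OpenSSH's `*` and `?` host patterns.

```python
from fnmatch import fnmatchcase


def bastion_ssh_config(bastion: str, host_pattern: str, user: str) -> str:
    """Render an ssh_config entry that reaches hosts matching host_pattern via the bastion."""
    # If the bastion matched its own pattern, ssh would try to reach the bastion
    # through itself; reject that configuration up front.
    if fnmatchcase(bastion, host_pattern):
        raise ValueError(f"bastion {bastion!r} matches its own pattern {host_pattern!r}")
    lines = [
        f"Host {host_pattern}",
        f"    ProxyJump {bastion}",  # connect to the jump host first, then onward
        f"    User {user}",
    ]
    return "\n".join(lines) + "\n"
```

Calling `bastion_ssh_config("bastion-01.example.net", "*.prod.example.internal", "alice")` produces a three-line `Host` block that sends every matching connection through the bastion.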

Secrets Management

GitLab uses two secret management approaches: GKMS for Google Cloud Platform (GCP) services, and Chef encrypted data bags for all other host secrets.

GKMS Secrets

For more information about secret management, see the runbooks for Chef secrets using GKMS, Chef vault, and how we manage secrets in Kubernetes.

Monitoring

To see how GitLab.com is doing, and for more information on monitoring, visit the monitoring handbook.

Exceptions

Exceptions to this architecture policy and design will be tracked in the compliance issue tracker.

References

Disaster Recovery Architecture

Supporting Architecture
